- #INSTALL SPARK ON WINDOWS JUPYTER NOTEBOOK PIP PYSPARK HOW TO#

- #INSTALL SPARK ON WINDOWS JUPYTER NOTEBOOK PIP PYSPARK INSTALL#

- #INSTALL SPARK ON WINDOWS JUPYTER NOTEBOOK PIP PYSPARK SOFTWARE#

Apache Spark caches data in memory, which makes it very fast. It achieves high performance for both batch and streaming data using a DAG scheduler, an efficient query optimizer, and a physical execution engine. Spark makes it easier for developers to build parallel applications in Java, Scala, Python, R, and SQL. It provides high-level APIs for developing applications in any of these programming languages.


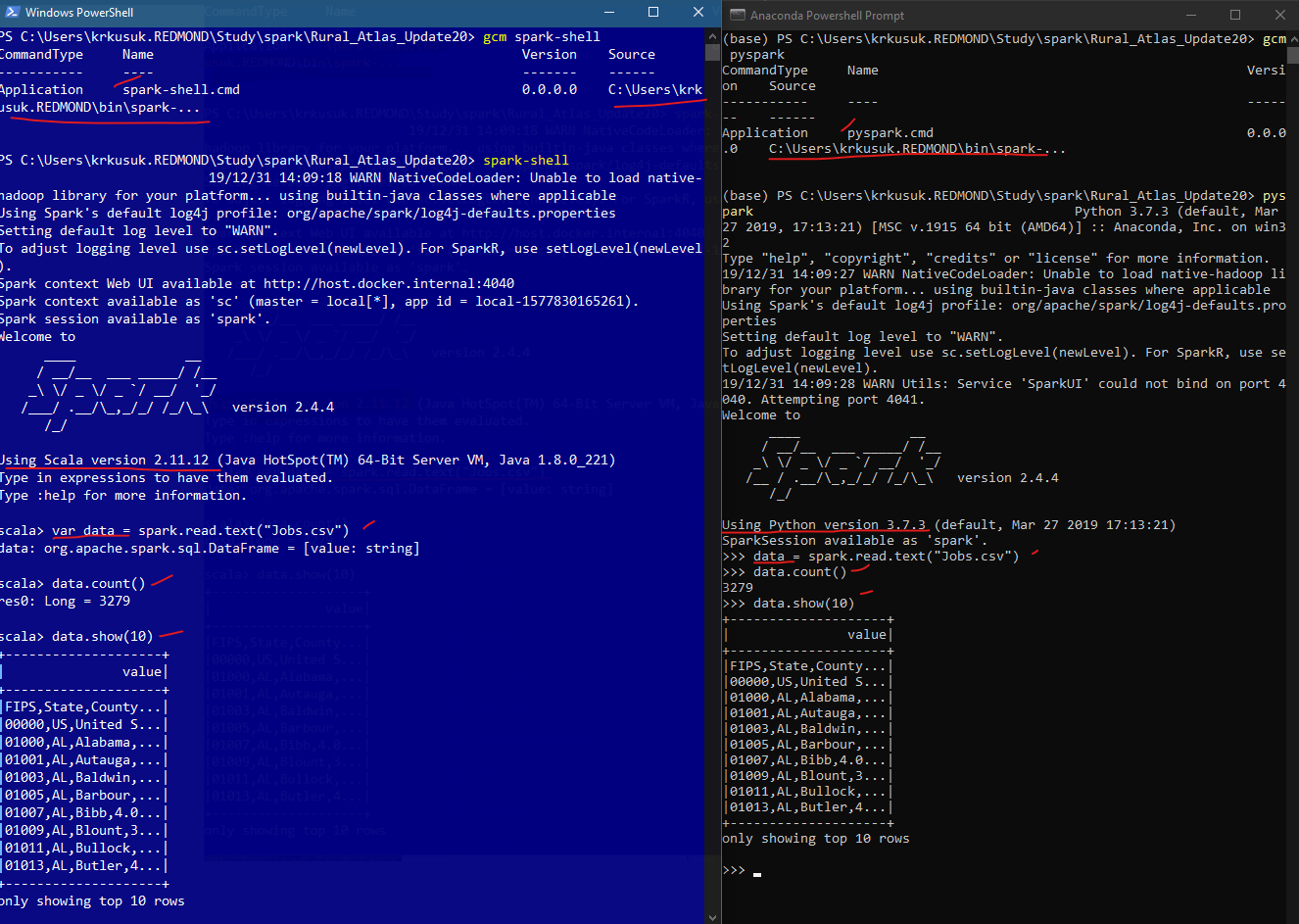

If you are struggling to install PySpark on your Windows machine, look no further. In this post, I will show you how to install PySpark correctly on Windows without any hassle.

Installing PySpark using Docker: why use Docker to install PySpark?

I had been trying to install PySpark on my Windows laptop for the past 3 days. I tried almost every method given in various blog posts on the internet, but nothing seemed to work. Some of the main issues with installing PySpark on Windows are related to Java, such as Py4JError. The methods described in many articles worked on some machines but not on many others, because we all have different hardware and software configurations. So what works on one machine is not guaranteed to work on another.

With Docker, it does not matter what hardware and software you are using. If an application built with Docker runs on one machine, it should run the same way on others, because everything needed to run the application is included in the Docker container. Understanding how to use Docker is also a very important skill for any data scientist, so along the way you will also learn to use it, which is an added benefit.

So let's see how to install PySpark with Docker.

First, go to the Docker website and create an account. Then download the Docker Desktop installer and double-click it to install it. Once it is installed, Docker Desktop opens to its main screen.

Now we need to download the PySpark image (`jupyter/pyspark-notebook`) from Docker Hub. Copy the docker pull command from the image's page and run it in Windows PowerShell or Git Bash. Once the image is downloaded, you will see the "Pull complete" message, and the PySpark image will appear inside the Docker Desktop app.

Then run this command in PowerShell to start the container: `docker run -p 8888:8888 jupyter/pyspark-notebook`

Copy the address shown in PowerShell, paste it into your web browser, and hit Enter. This should open Jupyter Notebook in your browser.

Now, let's test whether PySpark runs without any errors. Start a PySpark session in a notebook. If everything is installed correctly, the session should start without any problem, and you have successfully installed PySpark on your machine.

To stop the container, either use the Docker Desktop app or stop it from the command line. The container can also be removed permanently from the command line.

To learn more about Docker, YouTube tutorials are a good place to start. In my future posts, I will write more about how to use Docker for data science.
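
The test step from the walkthrough (starting a PySpark session inside the notebook) could look roughly like the sketch below. This is not the exact command from the post. It assumes the code runs in a notebook inside the `jupyter/pyspark-notebook` container, where PySpark and Java are already available. The function name `run_smoke_test`, the app name, and the sample rows are hypothetical and chosen only for illustration.

```python
# Hypothetical sample data, used only to give Spark a tiny job to run.
SAMPLE_ROWS = [(1, "a"), (2, "b"), (3, "c")]


def run_smoke_test(app_name="pyspark-smoke-test"):
    """Start a local Spark session, run a small job, and return the row count."""
    # Imported inside the function so this cell can still be defined
    # on a machine where PySpark is not installed.
    from pyspark.sql import SparkSession

    # local[*] runs Spark inside this process using all available cores.
    spark = (
        SparkSession.builder
        .master("local[*]")
        .appName(app_name)
        .getOrCreate()
    )
    try:
        df = spark.createDataFrame(SAMPLE_ROWS, ["id", "letter"])
        df.show()          # prints the small table to the notebook output
        return df.count()  # forces Spark to actually execute the job
    finally:
        # Release the session so repeated runs start from a clean state.
        spark.stop()
```

Calling `run_smoke_test()` in a notebook cell should print the small table and return the number of rows. A Java or Py4J error at this point would suggest the environment is not set up correctly.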
